The Ethics of Artificial Intelligence: Examining Moral Responsibility in Autonomous Systems

Table of Contents

Chapter One

1.1 Introduction

1.2 Background of Study

1.3 Problem Statement

1.4 Objective of Study

1.5 Limitation of Study

1.6 Scope of Study

1.7 Significance of Study

1.8 Structure of the Research

1.9 Definition of Terms

Chapter Two

2.1 Overview of Artificial Intelligence Ethics

2.2 Historical Perspectives on Moral Responsibility

2.3 Theoretical Frameworks in AI Ethics

2.4 Ethical Dilemmas in Autonomous Systems

2.5 Case Studies on AI and Moral Agency

2.6 Ethical Decision-Making in AI Systems

2.7 Public Perception and Trust in AI

2.8 Ethical Governance and Regulation in AI

2.9 Ethical Implications of AI Bias

2.10 Future Trends in AI Ethics

Chapter Three

3.1 Research Design and Approach

3.2 Data Collection Methods

3.3 Sampling Techniques

3.4 Ethical Considerations in Research

3.5 Data Analysis Procedures

3.6 Research Instrumentation

3.7 Validity and Reliability Measures

3.8 Limitations of Research Methodology

Chapter Four

4.1 Analysis of Data

4.2 Interpretation of Findings

4.3 Comparison with Existing Literature

4.4 Discussion on Ethical Implications

4.5 Recommendations for Future Research

4.6 Practical Applications of Research

4.7 Implications for Policy and Practice

4.8 Critical Reflections on Research Process

Chapter Five

5.1 Summary of Findings

5.2 Conclusion and Interpretation

5.3 Theoretical Contributions

5.4 Practical Implications

5.5 Recommendations for Further Research

5.6 Concluding Remarks

5.7 Reflections on the Research Journey

Project Abstract

Artificial intelligence (AI) technology has advanced rapidly in recent years, leading to the development of autonomous systems that can make decisions and act without human intervention. As these systems become more prevalent across society, questions about the ethical implications of their actions, and about the moral responsibility associated with them, have become increasingly significant. This research project explores the ethical issues surrounding AI technology, focusing specifically on the moral responsibility that arises when autonomous systems are entrusted with decision-making. The study begins with a comprehensive review of the existing literature on AI ethics, providing background on the evolution of AI technology and its implications for society. The research identifies a critical gap in the literature regarding the moral responsibility of autonomous systems, which prompts an in-depth investigation of this aspect of AI ethics. Using a qualitative research methodology, the study examines the ethical frameworks that govern human decision-making and assesses their applicability to autonomous systems. The methodology involves interviews with experts in AI ethics and case studies of real-world applications of autonomous systems, which together provide insight into the moral responsibility of these technologies. The findings highlight the nuanced ethical considerations that arise when assigning moral responsibility to autonomous systems. The study reveals the limitations of current ethical frameworks in addressing the unique challenges posed by AI technology and proposes novel approaches to these issues. The discussion of the findings examines their practical implications, offering recommendations for policymakers, industry stakeholders, and ethicists on how to navigate the complex ethical landscape of AI technology.
The study emphasizes the importance of developing robust ethical guidelines and regulatory frameworks to ensure that autonomous systems uphold moral standards and are held accountable for their actions. In conclusion, this research project contributes to the ongoing discourse on the ethics of AI technology by shedding light on the moral responsibility of autonomous systems. By critically examining the ethical implications of AI decision-making, this study aims to inform future developments in AI technology and promote responsible innovation in the field.

Project Overview

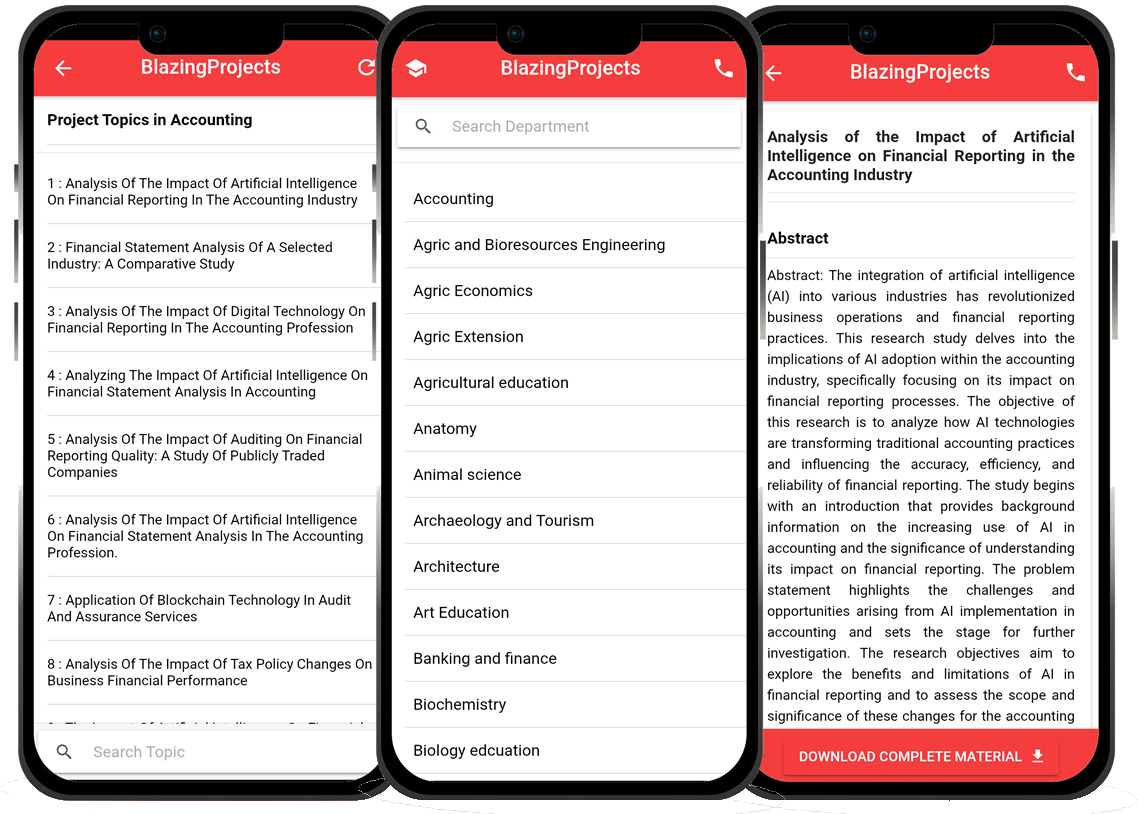

The project topic, "The Ethics of Artificial Intelligence: Examining Moral Responsibility in Autonomous Systems," addresses the intersection of moral philosophy and technology, particularly in the context of autonomous systems. As artificial intelligence (AI) continues to advance and permeate many aspects of society, questions about the ethical implications of AI systems and the moral responsibility associated with their actions have become increasingly pertinent. This research critically analyzes the ethical considerations inherent in the development, deployment, and use of AI technologies that operate autonomously, without direct human intervention. Its overarching objective is to investigate the ethical challenges posed by autonomous AI systems and to explore the concept of moral responsibility in relation to these technologies. By examining key ethical theories and principles, such as consequentialism, deontology, and virtue ethics, the study seeks to provide a comprehensive framework for evaluating the ethical implications of autonomous AI systems. The research will also consider the impact of AI decision-making on individuals, society, and the environment, focusing on accountability, transparency, fairness, and bias in AI algorithms. Through a thorough review of existing literature on AI ethics, moral philosophy, and technology ethics, the research will identify key themes, debates, and gaps in current scholarship on the ethical dimensions of autonomous systems. Drawing on interdisciplinary perspectives from philosophy, computer science, and the social sciences, the study will offer a nuanced analysis of the moral challenges posed by AI technologies and their implications for human agency, autonomy, and accountability in an increasingly AI-driven world.
The methodology will combine qualitative and quantitative approaches, including literature review, case studies, ethical analysis, and empirical data collection. By engaging with experts in AI ethics and interviewing stakeholders from diverse backgrounds, the study aims to generate insights that can inform ethical decision-making, policy development, and technological design for autonomous AI systems. Ultimately, the findings are expected to contribute to the ongoing discourse on AI ethics and moral responsibility, offering insights for policymakers, industry professionals, researchers, and the general public. By fostering a deeper understanding of the ethical challenges posed by autonomous AI systems, the study seeks to promote responsible AI development and deployment practices that prioritize human well-being, social justice, and ethical values in the age of artificial intelligence.