Enhancing Cybersecurity through Explainable AI and Interpretability Techniques

Table Of Contents

1. Introduction
   1.1 Background
   1.2 Significance of Explainable AI in Cybersecurity
   1.3 Challenges in Interpretable Cybersecurity AI
   1.4 Research Objectives
   1.5 Scope of the Study
   1.6 Organization of the Thesis

2. Literature Review
   2.1 Overview of AI in Cybersecurity
   2.2 Explainable AI Techniques and Interpretability in Cybersecurity
   2.3 Applications of Explainable AI in Threat Detection
   2.4 Interpretable Machine Learning Models for Cybersecurity
   2.5 Related Research on Explainable AI in Cybersecurity
   2.6 Evaluation Metrics for Interpretable Cybersecurity AI
   2.7 Challenges and Opportunities in Explainable AI for Cybersecurity

3. Methodology
   3.1 Data Collection and Preprocessing for Interpretable Cybersecurity AI
   3.2 Selection of Explainable AI Models and Algorithms
   3.3 Design and Implementation of Interpretable Threat Detection Techniques
   3.4 Performance Evaluation Metrics for Explainable AI in Cybersecurity
   3.5 Ethical Considerations in Interpretable AI Research
   3.6 Experimentation Setup for Interpretable Cybersecurity AI
   3.7 Validation and Verification of Interpretable AI Models

4. Implementation and Results
   4.1 Deployment of Explainable AI Models for Threat Detection
   4.2 Comparative Analysis of Interpretable Cybersecurity AI Techniques
   4.3 Visualization of Explainable AI Results
   4.4 Performance Evaluation and Accuracy of Interpretable AI Models
   4.5 Case Studies of Interpretable AI in Real-world Cybersecurity Applications
   4.6 User Acceptance and Usability of Explainable AI Systems
   4.7 Ethical Implications and Regulatory Compliance in Interpretable AI

5. Conclusion and Future Directions
   5.1 Summary of Research Findings
   5.2 Implications for Cybersecurity Advancements
   5.3 Limitations and Challenges of Explainable AI Models
   5.4 Future Research Directions in Interpretable Cybersecurity AI
   5.5 Ethical Implications and Regulatory Compliance
   5.6 Recommendations for Explainable AI Implementation
   5.7 Conclusion and Final Remarks

Project Abstract

The field of cybersecurity faces increasing challenges due to the evolving nature of cyber threats and the complexity of modern IT environments. This research explores the potential of explainable AI and interpretability techniques in bolstering cybersecurity measures. The study begins with a comprehensive review of AI in cybersecurity, focusing on the significance of explainable AI, challenges in interpretability, and the current landscape of research in this domain. The methodology encompasses data collection, preprocessing, the selection and implementation of explainable AI models and algorithms, and the design of interpretable threat detection techniques. Performance evaluation metrics, ethical considerations, and experimentation setup are integral components of the research methodology. The implementation phase involves the deployment of explainable AI models, comparative analysis of interpretable cybersecurity AI techniques, and visualization of results. The study concludes with a summary of research findings, implications for cybersecurity advancements, future research directions, ethical considerations, and regulatory compliance in interpretable AI. This research provides insights into the potential of explainable AI to enhance cybersecurity, with implications for threat detection, user acceptance, and real-world applications.
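The abstract does not name a specific interpretability method, so the following is only a minimal sketch of one common model-agnostic option, permutation feature importance, applied to a toy threat detector. Everything in it is an assumption made for illustration: the feature names (`failed_logins`, `bytes_out_kb`, `hour_of_day`), the synthetic data, and the rule-based `detector` that stands in for a trained model. The idea is to shuffle one feature at a time and measure how much the detector's accuracy drops; a larger drop suggests the detector relies more heavily on that feature.

```python
import random

# Hypothetical feature names, chosen only for illustration.
FEATURES = ["failed_logins", "bytes_out_kb", "hour_of_day"]


def make_synthetic_events(n, seed=0):
    """Generate toy events; the label depends on the first two features only."""
    rng = random.Random(seed)
    rows, labels = [], []
    for _ in range(n):
        row = [rng.randint(0, 10), rng.uniform(0, 500), rng.randint(0, 23)]
        labels.append(1 if row[0] >= 6 or row[1] > 450 else 0)
        rows.append(row)
    return rows, labels


def detector(row):
    """Stand-in for a trained model: returns 1 (threat) or 0 (benign)."""
    return 1 if row[0] >= 6 or row[1] > 450 else 0


def accuracy(rows, labels, predict):
    """Fraction of events whose prediction matches the label."""
    correct = sum(1 for r, y in zip(rows, labels) if predict(r) == y)
    return correct / len(rows)


def permutation_importance(rows, labels, predict, n_repeats=5, seed=1):
    """Mean accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels, predict)
    importances = {}
    for j, name in enumerate(FEATURES):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the label
            permuted = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(permuted, labels, predict))
        importances[name] = sum(drops) / n_repeats
    return baseline, importances


rows, labels = make_synthetic_events(500)
baseline, importances = permutation_importance(rows, labels, detector)

print(f"baseline accuracy: {baseline:.3f}")
for name, drop in sorted(importances.items(), key=lambda kv: -kv[1]):
    print(f"{name:>14}: mean accuracy drop {drop:.3f}")
```

Because the stand-in detector never reads `hour_of_day`, shuffling that column cannot change any prediction, so its importance is zero. In a real study the detector would be a trained model, and the ranking would be checked against held-out data rather than the same synthetic events.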

Project Overview

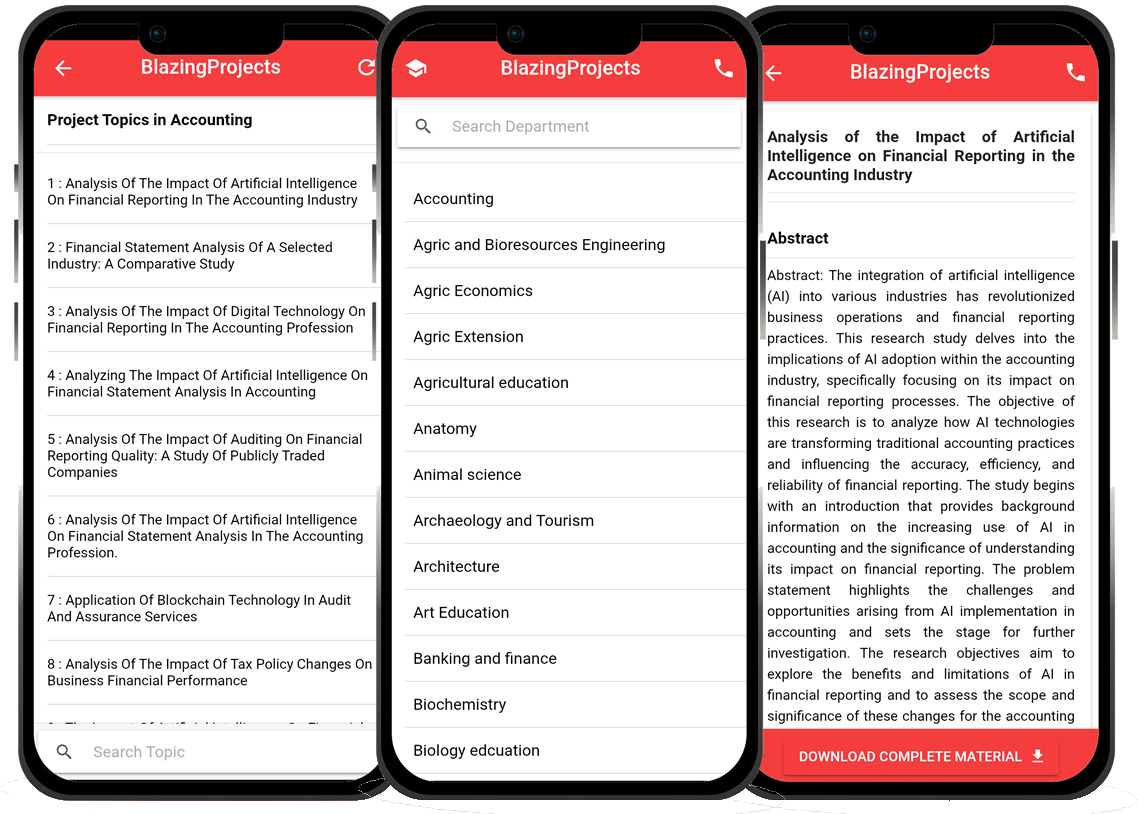
