Design and implementation of an online tours and travel management system

Table of Contents

Project Abstract

Project Overview

- INTRODUCTION

1.1 INTRODUCTION TO PROJECT

1.2 ORGANIZATION PROFILE

1.3 PURPOSE OF THE SYSTEM

1.4 PROBLEMS IN EXISTING SYSTEM

1.5 SOLUTION OF THESE PROBLEMS

- SYSTEM ANALYSIS

2.1 INTRODUCTION

2.2 ANALYSIS MODEL

2.3 STUDY OF THE SYSTEM

2.4 SYSTEM REQUIREMENT SPECIFICATIONS

2.5 PROPOSED SYSTEM

2.6 INPUT AND OUTPUT

2.7 PROCESS MODULES USED WITH JUSTIFICATION

- FEASIBILITY REPORT

3.1 TECHNICAL FEASIBILITY

3.2 OPERATIONAL FEASIBILITY

3.3 ECONOMICAL FEASIBILITY

- SOFTWARE REQUIREMENT SPECIFICATIONS

4.1 FUNCTIONAL REQUIREMENTS

4.2 PERFORMANCE REQUIREMENTS

- SELECTED SOFTWARE

5.1 INTRODUCTION TO .NET FRAMEWORK

5.2 ASP.NET

5.3 C#.NET

5.4 SQL SERVER

- SYSTEM DESIGN

6.1 INTRODUCTION

6.2 NORMALIZATION

6.3 E-R DIAGRAM

6.4 DATA FLOW DIAGRAMS

6.5 DATA DICTIONARY

6.6 UML DIAGRAMS

- OUTPUT SCREENS

- SYSTEM TESTING AND IMPLEMENTATION

8.1 INTRODUCTION

8.2 STRATEGIC APPROACH OF SOFTWARE TESTING

8.3 UNIT TESTING

- SYSTEM SECURITY

9.1 INTRODUCTION

9.2 SECURITY IN SOFTWARE

- CONCLUSION

- FUTURE ENHANCEMENTS

- BIBLIOGRAPHY

1.1 INTRODUCTION TO PROJECT

As part of the development process, members of the company's staff are required to undertake trips to various parts of the globe. The visits may be for business or operational purposes. In this, the company is assisted by one of its departments, the Voyage.

1.2 ORGANIZATION PROFILE

Software Solutions is an IT solution provider for a dynamic environment where business and technology strategies converge. Their approach focuses on new ways of doing business, combining IT innovation and adoption while also leveraging an organization's current IT assets. They work with large global corporations and on new products or services to implement prudent business and technology strategies in today's environment.

THIS APPROACH RESTS ON:

- A strategy where we architect, integrate and manage technology services and solutions – we call it AIM for success.

- A robust offshore development methodology and reduced demand on customer resources.

- A focus on the use of reusable frameworks to provide cost and time benefits.

They combine the best people, processes and technology to achieve excellent results consistently. They offer customers the advantages of:

SPEED:

They understand the importance of timing, of getting there before the competition. A rich portfolio of reusable, modular frameworks helps jump-start projects. A tried and tested methodology ensures that they follow a predictable, low-risk path to achieve results. Their track record is testimony to complex projects delivered within, and even before, schedule.

EXPERTISE:

Their teams combine cutting-edge technology skills with rich domain expertise. Equally important, the teams share a strong customer orientation, which means they actually start by listening to the customer. They are focused on coming up with solutions that serve customer requirements today and anticipate future needs.

A FULL SERVICE PORTFOLIO:

They offer customers the advantage of being able to architect, integrate and manage technology services. This means that customers can rely on one fully accountable source instead of trying to integrate disparate multi-vendor solutions.

SERVICES:

Xxx provides its services to companies in fields such as production and quality control. With their rich expertise and experience in information technology, they are in the best position to provide software solutions to distinct business requirements.

1.3 PURPOSE OF THE PROJECT

As part of the development process, members of the company's staff are required to undertake trips to various parts of the globe. The visits may be for business or operational purposes. In this, the company is assisted by one of its departments, the Voyage. The Voyage assists the company in the following areas:

- Passport applications

- Visa/Work-permit applications

- Visa/Work-permit related information

- Travel and accommodation in foreign countries.

- Correspondence and liaison with foreign embassies/High commissions.

- Administration of the travel policy of the company.

1.4 PROBLEMS IN EXISTING SYSTEM

The Voyage is required to maintain considerable information on employees, their passports, Visa/Work-permit related information, travel information, extensions, etc. Presently these records are maintained in Microsoft Excel in standalone mode. There is a need to automate this function and merge it with the existing HR system of the company.

1.5 SOLUTION OF THESE PROBLEMS

The new system is intended to automate the entire process using a database integration approach. Its main features are:

- User friendliness is provided through the various controls of the system's rich user interface.

- The system makes overall project management much easier and more flexible.

- It can be accessed over the intranet.

- Employee information is stored in a centralized database maintained by the system.

- User information is more secure because the data is not stored on client machines.

- Authentication is provided so that only registered users can access the application.

- The automated system will provide reliable services to employees.

- The speed and accuracy of the process will improve.

SYSTEM ANALYSIS

2.1 INTRODUCTION

After analyzing the requirements of the task to be performed, the next step is to analyze the problem and understand its context. The first activity in this phase is studying the existing system; the other is understanding the requirements and domain of the new system. Both activities are equally important, but the first serves as the basis for the functional specifications and, in turn, for a successful design of the proposed system. Understanding the properties and requirements of a new system is difficult and requires creative thinking. Understanding the existing running system is also difficult, and an improper understanding of it can divert the effort away from the solution.

2.2 ANALYSIS MODEL

SDLC METHODOLOGIES

This document plays a vital role in the software development life cycle (SDLC), as it describes the complete requirements of the system. It is meant for use by developers and will be the basis during the testing phase. Any changes made to the requirements in the future will have to go through a formal change approval process.

The SPIRAL MODEL was defined by Barry Boehm in his 1988 article “A Spiral Model of Software Development and Enhancement”. This model was not the first to discuss iterative development, but it was the first to explain why the iteration matters.

As originally envisioned, the iterations were typically 6 months to 2 years long. Each phase starts with a design goal and ends with a client reviewing the progress thus far. Analysis and engineering efforts are applied at each phase of the project, with an eye toward the end goal of the project.

The steps for Spiral Model can be generalized as follows:

- The new system requirements are defined in as much detail as possible. This usually involves interviewing a number of users representing all the external or internal users and other aspects of the existing system.

- A preliminary design is created for the new system.

- A first prototype of the new system is constructed from the preliminary design. This is usually a scaled-down system, and represents an approximation of the characteristics of the final product.

- A second prototype is evolved by a fourfold procedure:

- Evaluating the first prototype in terms of its strengths, weaknesses and risks.

- Defining the requirements of the second prototype.

- Planning and designing the second prototype.

- Constructing and testing the second prototype.

- At the customer's option, the entire project can be aborted if the risk is deemed too great. Risk factors might involve development cost overruns, operating-cost miscalculation, or any other factor that could, in the customer's judgment, result in a less-than-satisfactory final product.

- The existing prototype is evaluated in the same manner as was the previous prototype, and if necessary, another prototype is developed from it according to the fourfold procedure outlined above.

- The preceding steps are iterated until the customer is satisfied that the refined prototype represents the final product desired.

- The final system is constructed, based on the refined prototype.

- The final system is thoroughly evaluated and tested. Routine maintenance is carried out on a continuing basis to prevent large-scale failures and to minimize downtime.
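The iteration rule in the steps above (build a prototype, evaluate it, abort if the risk is too great, otherwise refine until the customer is satisfied) can be sketched as a loop. This is a minimal Python sketch, not part of the report's design: the `Prototype` fields, the numeric risk scale, the `max_risk` threshold and the `evaluate` callback (which stands in for one pass of the fourfold procedure) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Prototype:
    version: int
    estimated_risk: float      # hypothetical scale: 0.0 (safe) to 1.0 (certain failure)
    customer_satisfied: bool


def spiral(evaluate: Callable[[int], Prototype],
           max_risk: float = 0.7,
           max_iterations: int = 10) -> Optional[Prototype]:
    """Iterate prototypes until the customer accepts one or the risk is too great.

    `evaluate(version)` stands in for one pass of the fourfold procedure:
    evaluate the previous prototype, define requirements, plan and design,
    then construct and test the next prototype.
    """
    for version in range(1, max_iterations + 1):
        proto = evaluate(version)
        if proto.estimated_risk > max_risk:
            return None        # customer's option: abort the project
        if proto.customer_satisfied:
            return proto       # refined prototype becomes the basis of the final system
    return None                # iteration budget used up without acceptance
```

For example, an `evaluate` whose risk falls with each version and which is accepted at version 3 makes `spiral` return that third prototype, while one that always reports a risk above the threshold makes it return `None` on the first pass.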

The following diagram illustrates the spiral model:

Fig 1.0-Spiral Model

2.3 STUDY OF THE SYSTEM

For flexibility of use, the interface has been developed with a graphical concept in mind and is accessed through a browser. At the top level, the GUIs have been categorized as:

- Administrative user interface

- The operational or generic user interface

The administrative user interface concentrates on the consistent information that is part of the organizational activities and that needs proper authentication for data collection. These interfaces help administrators with all transactional operations, such as data insertion, data deletion and data updating, along with extensive data search capabilities.

The operational or generic user interface helps users of the system carry out transactions on the existing data and required services. It also helps ordinary users manage their own information in a customized manner, within the flexibility the system provides.

NUMBER OF MODULES

After careful analysis, the system has been divided into the following modules:

- Administrator

- Company Employees

- Visa Processing

- Country Information

- Travelling

- Reports

- Authentication

Administration

The administrator is the head of Voyage management and has all privileges in this system. The administrator can register new employees and departments in the system, keep track of team employees and their performance, and find vacant positions in other countries (referred to as outsourcing). Only the administrator has the authority to select employees to send for outsourcing, and he can make the necessary arrangements for the employees who are going.

Company Employees

Employees work for the company in this country or are outsourced to other countries. An employee who wants to go for outsourcing needs to provide complete documents such as educational qualifications, passport and identification details. After visa processing is complete, he can move from here to the destination country. Before leaving, he can learn the details of his work location, work environment, technologies, etc. If he does not have a passport, he can provide the necessary documents to apply for one.

Visa Processing

This is where the major issue starts, because the company must obtain a visa for each employee going for outsourcing. Visa processing differs from country to country. For every visa application the company must provide the employee's complete information, such as passport details, work location, work permission, number of days, work type, salary details and experience. Only when visa processing is complete is the employee ready to travel to the other country.
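The rule that an application needs the listed details before processing starts, and that an employee may travel only after visa processing completes, can be modelled as a small status object. A minimal Python sketch, assuming hypothetical field names and a three-state status; the report does not specify the actual table or class design.

```python
from dataclasses import dataclass, field
from enum import Enum


class VisaStatus(Enum):
    NOT_STARTED = "not started"
    IN_PROGRESS = "in progress"
    COMPLETED = "completed"


# Hypothetical names for the details the text lists for every visa application.
REQUIRED_DETAILS = ("passport_number", "work_location", "work_permit",
                    "number_of_days", "work_type", "salary", "experience")


@dataclass
class VisaApplication:
    employee_id: str
    country: str
    details: dict = field(default_factory=dict)
    status: VisaStatus = VisaStatus.NOT_STARTED

    def missing_details(self) -> list:
        """Required details that are absent or blank."""
        return [d for d in REQUIRED_DETAILS if self.details.get(d) in (None, "")]

    def submit(self) -> None:
        """Start processing only when every required detail has been supplied."""
        missing = self.missing_details()
        if missing:
            raise ValueError(f"incomplete application, missing: {missing}")
        self.status = VisaStatus.IN_PROGRESS

    def complete(self) -> None:
        """Mark processing as finished; only valid for an application in progress."""
        if self.status is not VisaStatus.IN_PROGRESS:
            raise ValueError("only an application in progress can be completed")
        self.status = VisaStatus.COMPLETED

    def ready_to_travel(self) -> bool:
        # The employee may travel only after visa processing is complete.
        return self.status is VisaStatus.COMPLETED
```

Keeping the status transitions inside the object means no screen can mark an employee ready to travel while the application is still incomplete.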

Country Information

Before going for outsourcing, an employee must know the details of the country where he or she will work. The system provides as much information as possible about the destination country, mainly its food habits, hotel details, vehicle transportation, office environment, office timings, currency details, etc.

Travelling

For travel to other countries, the system also provides complete information about the mode of travel. This mainly covers flight information: the destination country, whether a direct flight is available, whether the ticket is confirmed, executive or economy class, and seat details. The system also provides a vehicle from the airport to the employee's hotel.

2.4 SYSTEM REQUIREMENT SPECIFICATIONS

Hardware Requirements:

- Pentium IV 2.8 GHz processor or above

- RAM 512MB and Above

- HDD 40 GB Hard Disk Space and Above

Software Requirements:

- WINDOWS OS (XP / 2000 / 2000 Server / 2003 Server)

- Visual Studio .Net 2008 Enterprise Edition

- Internet Information Server 5.0 (IIS)

- .NET Framework version 3.5 (minimum for deployment)

- SQL Server 2005 Enterprise Edition

2.5 PROPOSED SYSTEM

The proposed system aims to debug the existing system, remove procedures that cause data redundancy and make the navigational sequence proper. It also aims to provide information about users at different levels, reflect the current work status of the organization, and build a strong password mechanism.
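One common way to build the strong password mechanism mentioned above is to store only a salted, deliberately slow hash of each password, together with a basic strength check at registration. The following Python sketch uses the standard library's `hashlib.pbkdf2_hmac` and `hmac.compare_digest`; the iteration count and the strength policy are assumptions, not requirements stated in this report, and the target platform (ASP.NET with SQL Server) would use its own equivalents.

```python
import hashlib
import hmac
import os
import re

ITERATIONS = 100_000   # assumed PBKDF2 work factor; tune for the deployment hardware


def is_strong(password: str) -> bool:
    """Assumed policy: at least 8 characters with a lower-case letter, an upper-case letter and a digit."""
    return (len(password) >= 8
            and re.search(r"[a-z]", password) is not None
            and re.search(r"[A-Z]", password) is not None
            and re.search(r"\d", password) is not None)


def hash_password(password: str) -> tuple:
    """Return (salt, digest) to store; the plain-text password itself is never stored."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)
```

A consequence of this design is that a forgotten password cannot be shown back to the user; after the hint question is answered correctly, the system would let the user set a new password instead.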

NEED FOR COMPUTERIZATION

We all know the importance of computerization. The world is moving ahead at lightning speed and everyone is running short of time. One always wants to get information and perform desired tasks within a short period of time, with efficiency and accuracy. The application areas for computerization have been selected on the basis of the following factors:

- Minimizing the manual records kept at different locations.

- Improving data integrity.

- Displaying the desired information very quickly by retrieving it for users.

- Providing statistical information that helps in decision-making.

- Reducing manual effort in activities that involve repetitive work.

- Making the updating and deletion of such a huge amount of data easier.

FUNCTIONAL FEATURES OF THE MODEL

The functionality of the project is simple. The objective of the proposal is to strengthen the functioning of Audit Status Monitoring and make it more effective. The entire scope has been classified into five streams known as Coordinator Level, Management Level, Auditor Level, User Level and State Web Coordinator Level. The proposed software will cover the information needs of each user group with respect to each request, viz. accepting the request, providing the vulnerability document report and reporting the current status of the audit.

2.6 INPUT AND OUTPUT

The major inputs, outputs and functions of the system are as follows:

Inputs:

- Admin enters his user ID and password to log in.

- User enters his user ID and password to log in.

- New users give their complete personal, address and phone details for registration.

- Admin gives different kinds of user information to search the user data.

- User gives his user ID, hint question and answer to retrieve a forgotten password.

- Employee searches for flight booking status.

- Administrator searches for visa processing status.

Outputs:

- Admin has his own home page.

- Users reach their own home page.

- User-defined data is stored in the centralized database.

- Admin gets the login information of a particular user.

- New users' data is stored in the centralized database.

- Admin gets search details for different criteria.

- User can recover a forgotten password.

- Travelling details are displayed to employees.

- Administrator gets visa processing completion documents.

2.7 PROCESS MODEL USED WITH JUSTIFICATION

ACCESS CONTROL FOR DATA WHICH REQUIRES USER AUTHENTICATION

The following commands specify access control identifiers and are typically used to authorize and authenticate the user (command codes are shown in parentheses).

USER NAME (USER)

The user identification is that which is required by the server for access to its file system. This command will normally be the first command transmitted by the user after the control connections are made (some servers may require this).

PASSWORD (PASS)

This command must be immediately preceded by the user name command, and, for some sites, completes the user’s identification for access control. Since password information is quite sensitive, it is desirable in general to “mask” it or suppress type out.
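The rule that PASS must immediately follow USER can be sketched as a small session state machine. In this minimal Python sketch the command names come from the text above and the three-digit replies are the FTP reply codes defined in RFC 959, which uses the same USER and PASS commands; the in-memory `accounts` dictionary and the plain-text comparison are hypothetical simplifications (a real system would compare salted hashes).

```python
# Reply texts follow the FTP reply codes defined in RFC 959.
NEED_PASSWORD = "331 User name okay, need password."
LOGGED_IN = "230 User logged in, proceed."
COMMAND_OK = "200 Command okay."
NOT_LOGGED_IN = "530 Not logged in."
BAD_SEQUENCE = "503 Bad sequence of commands."


class Session:
    """Tracks one client's login state across commands."""

    def __init__(self, accounts: dict):
        self.accounts = accounts    # hypothetical {user_name: password} store
        self.pending_user = None    # set by USER, valid only for the very next command
        self.user = None            # set once USER/PASS succeeds

    def handle(self, line: str) -> str:
        cmd, _, arg = line.partition(" ")
        cmd = cmd.upper()
        # Any command consumes the pending user name, so PASS must come immediately after USER.
        pending, self.pending_user = self.pending_user, None
        if cmd == "USER":
            self.user = None
            self.pending_user = arg
            return NEED_PASSWORD
        if cmd == "PASS":
            if pending is None:
                return BAD_SEQUENCE
            # Plain-text comparison only for illustration; compare salted hashes in practice.
            if pending in self.accounts and self.accounts[pending] == arg:
                self.user = pending
                return LOGGED_IN
            return NOT_LOGGED_IN
        # Every other command requires a completed login.
        return COMMAND_OK if self.user is not None else NOT_LOGGED_IN
```

Clearing the pending user name on every command is what enforces "immediately preceded": a USER followed by any other command leaves PASS with nothing to complete.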

FEASIBILITY REPORT

The preliminary investigation examines project feasibility, that is, the likelihood that the system will be useful to the organization. The main objective of the feasibility study is to test the technical, operational and economical feasibility of adding new modules and debugging the old running system. Every system is feasible given unlimited resources and infinite time. There are three aspects in the feasibility study portion of the preliminary investigation:

- Technical Feasibility

- Operational Feasibility

- Economical Feasibility

3.1. TECHNICAL FEASIBILITY

The technical issues usually raised during the feasibility stage of the investigation include the following:

- Does the necessary technology exist to do what is suggested?

- Does the proposed equipment have the technical capacity to hold the data required to use the new system?

- Will the proposed system provide adequate response to inquiries, regardless of the number or location of users?

- Can the system be upgraded if developed?

- Are there technical guarantees of accuracy, reliability, ease of access and data security?

Earlier, no system existed to cater to the needs of the ‘Secure Infrastructure Implementation System’. The current system is technically feasible. It is a web-based user interface for audit workflow at NIC-CSD, and thus provides easy access to the users. The purpose of the database is to create, establish and maintain a workflow among various entities in order to facilitate all concerned users in their various capacities or roles. Permissions are granted to users based on the roles specified, which supports accuracy, reliability and security. The software and hardware requirements for the development of this project are few and are either already available in-house at NIC or freely available as open source. The work for the project is done with the current equipment and existing software technology. The necessary bandwidth exists to provide fast feedback to users irrespective of the number of users of the system.

3.2. OPERATIONAL FEASIBILITY

Proposed projects are beneficial only if they can be turned into information systems that meet the organization's operating requirements. Operational feasibility is therefore an important part of project implementation. Some of the important issues raised to test the operational feasibility of a project include the following:

- Is there sufficient support for the project from management and from the users?

- Will the system be used and work properly if it is being developed and implemented?

- Will there be any resistance from the user that will undermine the possible application benefits?

This system is designed in accordance with the issues mentioned above. The management issues and user requirements were taken into consideration beforehand, so user resistance that could undermine the possible application benefits is not expected.

The well-planned design would ensure the optimal utilization of the computer resources and would help in the improvement of performance status.

3.3. ECONOMICAL FEASIBILITY

A system that can be developed technically, and that will be used if installed, must still be a good investment for the organization. In the economical feasibility study, the development cost of creating the system is evaluated against the ultimate benefit derived from the new system. Financial benefits must equal or exceed the costs.

The system is economically feasible. It does not require any additional hardware or software. Since the interface for this system is developed using the existing resources and technologies available at NIC, the expenditure is nominal and economical feasibility is assured.

SOFTWARE REQUIREMENT SPECIFICATION

The software, Site Explorer is designed for management of web sites from a remote location.

INTRODUCTION

Purpose: The main purpose for preparing this document is to give a general insight into the analysis and requirements of the existing system or situation and for determining the operating characteristics of the system.

Scope: This document plays a vital role in the software development life cycle (SDLC) and describes the complete requirements of the system. It is meant for use by the developers and will be the basis during the testing phase. Any changes made to the requirements in the future will have to go through a formal change approval process.

DEVELOPERS RESPONSIBILITIES OVERVIEW:

The developer is responsible for:

- Developing a system that meets the SRS and satisfies all the requirements of the system.

- Demonstrating the system and installing the system at client’s location after the acceptance testing is successful.

- Submitting the required user manual, describing how to work with the system interfaces, along with the system documentation.

- Conducting any user training that might be needed for using the system.

- Maintaining the system for a period of one year after installation.

4.1. FUNCTIONAL REQUIREMENTS

OUTPUT DESIGN

Outputs from computer systems are required primarily to communicate the results of processing to users. They are also used to provide a permanent copy of the results for later consultation. The various types of outputs in general are:

- External outputs, whose destination is outside the organization.

- Internal outputs, whose destination is within the organization and which are the user's main interface with the computer.

- Operational outputs, whose use is purely within the computer department.

- Interface outputs, which involve the user in communicating directly with the computer.

OUTPUT DEFINITION

- Type of the output

- Content of the output

- Format of the output

- Location of the output

- Frequency of the output

- Volume of the output

- Sequence of the output

It is not always desirable to print or display data as it is held on a computer. It should be decided which form of output is the most suitable.

For example:

- Will decimal points need to be inserted?

- Should leading zeros be suppressed?
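The two formatting questions above can be answered with small helpers that run just before display. A minimal Python sketch, assuming amounts are held as integer counts of minor currency units (for example cents) and that numeric codes are held as zero-padded strings; neither storage format is specified in this report.

```python
def insert_decimal_point(minor_units: int, places: int = 2) -> str:
    """Render an integer count of minor units with a decimal point, e.g. 125050 -> '1250.50'."""
    if places == 0:
        return str(minor_units)
    sign = "-" if minor_units < 0 else ""
    whole, frac = divmod(abs(minor_units), 10 ** places)
    # Zero-pad the fractional part to `places` digits so 5 becomes '0.05', not '0.5'.
    return f"{sign}{whole}.{frac:0{places}d}"


def suppress_leading_zeros(field: str) -> str:
    """Strip leading zeros from a zero-padded numeric field, keeping a single '0' for zero."""
    return field.lstrip("0") or "0"
```

Storing amounts as integers and inserting the decimal point only at output time avoids floating-point rounding in stored values.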

Output Media:

The next stage is to decide which medium is the most appropriate for the output. The main considerations when deciding on the output media are:

- The suitability of the device to the particular application.

- The need for a hard copy.

- The response time required.

- The location of the users

- The software and hardware available.

In view of the above description, the project's outputs fall mainly into the category of internal outputs. According to the requirement specification, the outputs need to be generated as hard copies as well as query results viewed on the screen. The format of these outputs is taken from the outputs currently obtained after manual processing. A standard printer is to be used as the output medium for hard copies.

INPUT DESIGN

Input design is a part of overall system design. The main objective during the input design is as given below:

- To produce a cost-effective method of input.

- To achieve the highest possible level of accuracy.

- To ensure that the input is acceptable and understood by the user.

INPUT STAGES:

The main input stages can be listed as below:

- Data recording

- Data transcription

- Data conversion

- Data verification

- Data control

- Data transmission

- Data validation

- Data correction

INPUT TYPES:

It is necessary to determine the various types of inputs. Inputs can be categorized as follows:

- External inputs, which are prime inputs for the system.

- Internal inputs, which are user communications with the system.

- Operational inputs, which are the computer department's communications to the system.

- Interactive, which are inputs entered during a dialogue.

INPUT MEDIA:

At this stage a choice has to be made about the input media. To decide on the input media, consideration has to be given to:

- Type of input

- Flexibility of format

- Speed

- Accuracy

- Verification methods

- Rejection rates

- Ease of correction

- Storage and handling requirements

- Security

- Ease of use

- Portability

Keeping in view the above description of the input types and input media, most of the inputs are internal and interactive. As input data is to be keyed in directly by the user, the keyboard can be considered the most suitable input device.

ERROR AVOIDANCE

At this stage care is to be taken to ensure that input data remains accurate from the stage at which it is recorded up to the stage at which the data is accepted by the system. This can be achieved only by means of careful control each time the data is handled.

ERROR DETECTION

Even though every effort is made to avoid the occurrence of errors, a small proportion of errors is still likely to occur. These errors can be discovered by using validations to check the input data.

DATA VALIDATION

Procedures are designed to detect errors in data at a lower level of detail. Data validations have been included in the system in almost every area where the user could commit errors. The system will not accept invalid data: whenever invalid data is keyed in, the system immediately prompts the user, who has to key in the data again, and the system accepts the data only if it is correct.
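The accept-only-valid-data loop described above can be sketched as a set of field rules plus a prompt that repeats until the value passes. In this minimal Python sketch the field names and patterns (for example a 10-digit phone number) are hypothetical; the `read` and `show` callbacks stand in for the form's input and error display so that the logic can be tested without a user interface.

```python
import re
from typing import Callable, Optional

# Hypothetical rules for a registration form: (pattern, hint shown to the user).
RULES = {
    "name": (re.compile(r"[A-Za-z][A-Za-z .'-]*"), "letters, spaces, dots, apostrophes or hyphens"),
    "phone": (re.compile(r"\d{10}"), "exactly 10 digits"),
    "email": (re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"), "an address such as user@example.com"),
}


def validate(field: str, value: str) -> Optional[str]:
    """Return None if the value is acceptable, otherwise an error message for the user."""
    pattern, hint = RULES[field]
    if pattern.fullmatch(value.strip()):
        return None
    return f"Invalid {field}: expected {hint}."


def read_valid(field: str, read: Callable[[str], str], show: Callable[[str], None]) -> str:
    """Prompt until the value passes validation; invalid data is never returned."""
    while True:
        value = read(f"Enter {field}: ")
        error = validate(field, value)
        if error is None:
            return value.strip()
        show(error)   # tell the user immediately, then ask again
```

Because each error message includes the expected format, the same rules also serve the error message design discussed later in this section.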

The system is designed to be a user friendly one. In other words the system has been designed to communicate effectively with the user. The system has been designed with popup menus.

USER INTERFACE DESIGN

It is essential to consult the system users and discuss their needs while designing the user interface:

USER INTERFACE SYSTEMS CAN BE BROADLY CLASSIFIED AS:

- User-initiated interfaces, in which the user is in charge, controlling the progress of the user/computer dialogue.

- Computer-initiated interfaces, in which the computer selects the next stage in the interaction.

In computer-initiated interfaces, the computer guides the progress of the user/computer dialogue. Information is displayed, and on the basis of the user's response the computer takes action or displays further information.

USER-INITIATED INTERFACES

User-initiated interfaces fall into two approximate classes:

- Command-driven interfaces: the user inputs commands or queries, which are interpreted by the computer.

- Forms-oriented interfaces: the user calls up an image of the form on his/her screen and fills in the form. The forms-oriented interface was chosen for this system.

COMPUTER-INITIATED INTERFACES

The following computer-initiated interfaces were used:

- Menu system: the user is presented with a list of alternatives and chooses one of them.

- Question-answer dialog system: the computer asks a question and takes action on the basis of the user's reply.

The system is menu driven from the start. The opening menu displays the available options, and choosing one option opens another popup menu with more options. In this way every option leads the user to a data entry form where the data can be keyed in.
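The menu-driven flow above, where each choice opens a further popup menu until a data entry form is reached, maps naturally onto a nested dictionary. A minimal Python sketch: the top-level entries are taken from the module list in Section 2.3, but the sub-options and form names are hypothetical.

```python
# Top-level entries follow the report's module list; sub-options and form names are hypothetical.
MENU = {
    "Company Employees": {"Register employee": "EmployeeForm", "Passport details": "PassportForm"},
    "Visa Processing": {"New application": "VisaForm", "Check status": "VisaStatusForm"},
    "Travelling": {"Flight booking": "FlightForm"},
}


def navigate(menu: dict, choices: list) -> str:
    """Follow 1-based menu choices and return the data entry form that is reached.

    Each dict level is one popup menu; a string value is the form an option opens.
    """
    node = menu
    for choice in choices:
        if not isinstance(node, dict):
            raise ValueError("a form has already been reached")
        options = list(node)              # dicts keep insertion order, so numbering is stable
        if not 1 <= choice <= len(options):
            raise ValueError(f"choose an option from 1 to {len(options)}")
        node = node[options[choice - 1]]
    if isinstance(node, dict):
        raise ValueError("selection ended on a menu, not on a form")
    return node
```

Keeping the menu as data rather than code means new options can be added without changing the navigation logic.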

ERROR MESSAGE DESIGN:

The design of error messages is an important part of user interface design. As users are bound to commit some errors, the system should be designed to be helpful by giving the user information about the error he/she has committed.

This application must be able to produce output at different modules for different inputs.

4.2. PERFORMANCE REQUIREMENTS

Performance is measured in terms of the output provided by the application.

Requirement specification plays an important part in the analysis of a system. Only when the requirement specifications are properly given is it possible to design a system that will fit into the required environment. Providing the requirement specifications rests largely on the users of the existing system, because they are the people who will finally use the system. The requirements have to be known during the initial stages so that the system can be designed according to them. It is very difficult to change the system once it has been designed, and a system that does not cater to the requirements of the user is of no use.

The requirement specification for any system can be broadly stated as given below:

- The system should be able to interface with the existing system

- The system should be accurate

- The system should be better than the existing system

The existing system is completely dependent on the user to perform all the duties.

SYSTEM DESIGN

6.1. INTRODUCTION

Software design sits at the technical kernel of the software engineering process and is applied regardless of the development paradigm and area of application. Design is the first step in the development phase for any engineered product or system. The designer's goal is to produce a model or representation of an entity that will later be built. Once system requirements have been specified and analyzed, system design is the first of the three technical activities (design, code and test) that are required to build and verify software.

The importance of design can be stated with a single word: quality. Design is the place where quality is fostered in software development. Design provides us with representations of software that can be assessed for quality. Design is the only way that we can accurately translate a customer's view into a finished software product or system. Software design serves as the foundation for all the software engineering steps that follow. Without a strong design we risk building an unstable system, one that will be difficult to test and whose quality cannot be assessed until the last stage.

During design, progressive refinements of data structure, program structure and procedural details are developed, reviewed and documented. System design can be viewed from either a technical or a project management perspective. From the technical point of view, design comprises four activities: architectural design, data structure design, interface design and procedural design.

6.2 NORMALIZATION

Normalization is the process of converting a relation to a standard form. It is used to handle problems that can arise from data redundancy, i.e. repetition of data in the database, to maintain data integrity, and to handle the insertion, update and deletion anomalies described below.

Decomposition is the process of splitting a relation into multiple relations to eliminate anomalies and maintain data integrity. To do this we use normal forms, or rules for structuring relations.

Insertion anomaly: Inability to add data to the database due to absence of other data.

Deletion anomaly: Unintended loss of data due to deletion of other data.

Update anomaly: Data inconsistency resulting from data redundancy and partial update

Normal Forms: These are the rules for structuring relations that eliminate anomalies.

FIRST NORMAL FORM:

A relation is said to be in first normal form if the values in the relation are atomic for every attribute in the relation. By this we mean simply that no attribute value can be a set of values or, as it is sometimes expressed, a repeating group.
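A small example shows what removing a repeating group means in practice. In this Python sketch, with illustrative data and hypothetical column names, each employee record holds a list of visa countries; flattening it to one row per employee and country makes every value atomic.

```python
# Illustrative data: 'visa_countries' is a repeating group, so this is not in 1NF.
unnormalized = [
    {"emp_id": "E001", "name": "Asha", "visa_countries": ["US", "UK"]},
    {"emp_id": "E002", "name": "Ravi", "visa_countries": ["DE"]},
]


def to_first_normal_form(rows):
    """Return one row per (emp_id, visa_country) so that every value is atomic."""
    return [
        {"emp_id": r["emp_id"], "name": r["name"], "visa_country": country}
        for r in rows
        for country in r["visa_countries"]
    ]


def is_atomic(rows):
    """True if no attribute value is a collection (i.e. there is no repeating group)."""
    return all(not isinstance(v, (list, tuple, set, dict))
               for r in rows for v in r.values())


flat = to_first_normal_form(unnormalized)
```

The result is in first normal form with the composite key (emp_id, visa_country), but name still depends on emp_id alone, which is exactly the partial dependency that second normal form removes.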

SECOND NORMAL FORM:

A relation is said to be in second normal form if it is in first normal form and satisfies any one of the following rules:

1) The primary key is not a composite primary key.

2) No non-key attributes are present.

3) Every non-key attribute is fully functionally dependent on the full set of primary key attributes.
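The following sketch, assuming the relation is held as a list of Python dictionaries, checks the second normal form condition by looking for non-key attributes that depend on only part of a composite key. The table, column names and helper functions are hypothetical. Sample rows can only refute a functional dependency, never prove one, so a reported dependency is a hint for the designer rather than a guarantee.

```python
from itertools import combinations


def fd_holds(rows, lhs, rhs):
    """True if the functional dependency lhs -> rhs holds in the given rows."""
    seen = {}
    for row in rows:
        key = tuple(row[a] for a in lhs)
        value = tuple(row[a] for a in rhs)
        # A second, different value for the same determinant breaks the FD.
        if seen.setdefault(key, value) != value:
            return False
    return True


def partial_dependencies(rows, key, non_key):
    """List (key subset, attribute) pairs where a non-key attribute
    depends on a proper subset of a composite key (a 2NF violation)."""
    found = []
    for attr in non_key:
        for size in range(1, len(key)):
            for subset in combinations(key, size):
                if fd_holds(rows, subset, [attr]):
                    found.append((subset, attr))
    return found


# Hypothetical relation with composite key (emp_id, trip_id).
trip_assignments = [
    {"emp_id": 1, "trip_id": 10, "emp_name": "Asha", "fare": 500},
    {"emp_id": 1, "trip_id": 11, "emp_name": "Asha", "fare": 700},
    {"emp_id": 2, "trip_id": 10, "emp_name": "Ravi", "fare": 450},
]

print(partial_dependencies(trip_assignments, ("emp_id", "trip_id"), ("emp_name", "fare")))
# [(('emp_id',), 'emp_name')]
```

Here `emp_name` depends on `emp_id` alone, so it would move to a separate employee table, while `fare` stays with the full composite key.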

THIRD NORMAL FORM:

A relation is said to be in third normal form if it is in second normal form and there exist no transitive dependencies.

Transitive Dependency: If a non-key attribute depends on another non-key attribute, which in turn depends on the primary key, then the first attribute is said to be transitively dependent on the primary key.

The above normalization principles were applied to decompose the data into multiple tables, so that the data is maintained in a consistent state.
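A minimal sketch of removing a transitive dependency: in the hypothetical rows below, `dept_name` depends on `dept_id`, which depends on the key `emp_id`. Projecting the relation into two tables stores each department name once, and a natural join on `dept_id` recovers the original rows. The table and column names are illustrative assumptions only.

```python
def project(rows, attrs):
    """Relational projection: keep only attrs and drop duplicate tuples."""
    seen, result = set(), []
    for row in rows:
        t = tuple(row[a] for a in attrs)
        if t not in seen:
            seen.add(t)
            result.append(dict(zip(attrs, t)))
    return result


def natural_join(left, right, on):
    """Join on a single attribute that is the key of `right`."""
    index = {r[on]: r for r in right}
    return [{**row, **index[row[on]]} for row in left]


# Hypothetical rows with emp_id -> dept_id -> dept_name (a transitive dependency).
staff = [
    {"emp_id": 1, "name": "Asha", "dept_id": "D1", "dept_name": "Voyage"},
    {"emp_id": 2, "name": "Ravi", "dept_id": "D1", "dept_name": "Voyage"},
    {"emp_id": 3, "name": "Mina", "dept_id": "D2", "dept_name": "Accounts"},
]

employee = project(staff, ["emp_id", "name", "dept_id"])
department = project(staff, ["dept_id", "dept_name"])

print(len(department))  # 2: each department name is now stored once
assert natural_join(employee, department, "dept_id") == staff  # lossless
```

Renaming a department now touches a single row in `department`, which is exactly the update anomaly the decomposition removes.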


6.3 E-R DIAGRAMS

- The relationships within the system are structured through a conceptual E-R diagram, which specifies not only the entities that exist but also the relationships through which the system operates and the cardinalities that are necessary for the system state to continue.

- The entity relationship diagram (ERD) depicts the relationships between data objects. The ERD is the notation used to carry out the data modeling activity; the attributes of each data object noted in the ERD can be described using a data object description.

- The primary components identified by the ERD are:

- Data object

- Relationships

- Attributes

- Various types of indicators.

The primary purpose of the ERD is to represent data objects and their relationships.
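One way to see the ERD components in code is the hypothetical Python sketch below: each data object becomes a class, attributes become fields, and a one-to-many relationship between an employee and the trips that employee requests is held as references on both sides. The `Employee` and `Trip` entities are assumptions for illustration; the project's actual E-R diagram is not reproduced here.

```python
from dataclasses import dataclass, field


@dataclass
class Employee:            # data object
    emp_id: int            # attribute used as identifier
    name: str              # attribute
    # "one" side of the one-to-many "requests" relationship
    trips: list = field(default_factory=list)


@dataclass
class Trip:                # data object
    trip_id: int
    destination: str
    # "many" side: each trip belongs to exactly one employee; excluded from
    # repr/eq so the two-way reference does not recurse
    employee: Employee = field(repr=False, compare=False)


def request_trip(emp, trip_id, destination):
    """Create a trip and record it on both ends of the relationship."""
    trip = Trip(trip_id, destination, emp)
    emp.trips.append(trip)
    return trip


asha = Employee(1, "Asha")
request_trip(asha, 10, "London")
request_trip(asha, 11, "Tokyo")
print(len(asha.trips))  # 2
```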

E-R Diagram:

6.4 DATA FLOW DIAGRAMS

A data flow diagram is a graphical tool used to describe and analyze the movement of data through a system. DFDs are the central tool and the basis from which the other components are developed. The transformation of data from input to output, through processes, may be described logically and independently of the physical components associated with the system; such diagrams are known as logical data flow diagrams. Physical data flow diagrams show the actual implementation and movement of data between people, departments and workstations. A full description of a system actually consists of a set of data flow diagrams. The data flow diagrams are developed using two familiar notations: Yourdon, and Gane and Sarson. Each component in a DFD is labeled with a descriptive name, and each process is further identified with a number used for reference.

The development of DFDs is done in several levels. Each process in a lower-level diagram can be broken down into a more detailed DFD at the next level. The top-level diagram is often called the context diagram. It consists of a single process, which plays a vital role in studying the current system. The process in the context-level diagram is exploded into other processes in the first-level DFD.

The idea behind exploding a process into further processes is that understanding at one level of detail is expanded into greater detail at the next level. This is repeated until no further explosion is necessary and enough detail has been described for the analyst to understand the process.

Larry Constantine first developed the DFD as a way of expressing system requirements in graphical form; this led to modular design.

A DFD, also known as a “bubble chart”, has the purpose of clarifying system requirements and identifying the major transformations that will become programs in system design. It is therefore the starting point of design, which proceeds down to the lowest level of detail. A DFD consists of a series of bubbles joined by data flows in the system.

DFD SYMBOLS:

A DFD uses four symbols:

- A square defines a source (originator) or destination of system data

- An arrow identifies data flow. It is the pipeline through which the information flows

- A circle or a bubble represents a process that transforms incoming data flow into outgoing data flows.

- An open rectangle is a data store, data at rest or a temporary repository of data

CONSTRUCTING A DFD:

Several rules of thumb are used in drawing DFD’S:

- Processes should be named and numbered for easy reference. Each name should be representative of the process.

- The direction of flow is from top to bottom and from left to right. Data traditionally flow from the source to the destination, although they may flow back to the source. One way to indicate this is to draw a long flow line back to the source. An alternative is to repeat the source symbol as a destination; since it is then used more than once in the DFD, it is marked with a short diagonal.

- When a process is exploded into lower-level details, the resulting sub-processes are numbered.

- The names of data stores and destinations are written in capital letters. Process and data flow names have the first letter of each word capitalized.
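The numbering convention for exploded processes can be sketched as a small recursive function. Here each process is a hypothetical `(name, children)` pair, children of process 1 are numbered 1.1, 1.2 and so on, and the process names are invented examples.

```python
def number_processes(processes, prefix=""):
    """Assign hierarchical numbers to (name, children) pairs:
    children of process 1 become 1.1, 1.2, and so on."""
    numbered = []
    for i, (name, children) in enumerate(processes, start=1):
        number = f"{prefix}{i}"
        numbered.append((number, name))
        numbered.extend(number_processes(children, number + "."))
    return numbered


# Hypothetical first-level DFD with one process exploded one level further.
level_1 = [
    ("Register Trip Request", [("Validate Request", []), ("Store Request", [])]),
    ("Approve Trip", []),
    ("Book Travel", []),
]

for number, name in number_processes(level_1):
    print(number, name)
```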

A DFD typically shows the minimum contents of each data store. Each data store should contain all the data elements that flow in and out of it.

Questionnaires should likewise cover all the data elements that flow in and out. Missing interfaces, redundancies and the like are then accounted for, often through interviews.

SALIENT FEATURES OF DFD’S

- The DFD shows the flow of data, not of control; loops and decisions are control considerations and do not appear on a DFD.

- The DFD does not indicate the time factor involved in any process, i.e. whether the data flows take place daily, weekly, monthly or yearly.

- The sequence of events is not brought out on the DFD.

TYPES OF DATA FLOW DIAGRAMS

- Current Physical

- Current Logical

- New Logical

- New Physical

CURRENT PHYSICAL:

In a current physical DFD, process labels include the names of people or their positions, or the names of computer systems that might provide some of the overall system processing; the label includes an identification of the technology used to process the data. Similarly, data flows and data stores are often labeled with the names of the actual physical media on which data are stored, such as file folders, computer files, business forms or computer tapes.

CURRENT LOGICAL:

The physical aspects of the system are removed as much as possible so that the current system is reduced to its essence: the data and the processes that transform them, regardless of their actual physical form.

NEW LOGICAL:

This is exactly like the current logical model if the user were completely happy with the functionality of the current system but had problems with how it was implemented. Typically, though, the new logical model will differ from the current logical model by having additional functions, obsolete functions removed and inefficient flows reorganized.

NEW PHYSICAL:

The new physical represents only the physical implementation of the new system.

RULES GOVERNING THE DFD’S

PROCESS

1) No process can have only outputs.

2) No process can have only inputs. If an object has only inputs, then it must be a sink.

3) A process has a verb phrase label.

DATA STORE

1) Data cannot move directly from one data store to another data store; a process must move the data.

2) Data cannot move directly from an outside source to a data store; a process must receive the data from the source and place it into the data store.

3) A data store has a noun phrase label.

SOURCE OR SINK

The origin and /or destination of data.

1) Data cannot move directly from a source to a sink; it must be moved by a process.

2) A source and/or sink has a noun phrase label.

DATA FLOW

1) A data flow has only one direction of flow between symbols. It may flow in both directions between a process and a data store to show a read before an update. The latter is usually indicated, however, by two separate arrows, since these happen at different times.

2) A join in a DFD means that exactly the same data comes from any of two or more different processes, data stores or sources to a common location.

3) A data flow cannot go directly back to the same process it leaves. There must be at least one other process that handles the data flow, produces some other data flow and returns the original data to the beginning process.

4) A data flow to a data store means update (insert, delete or change).

5) A data flow from a data store means retrieve or use.

A data flow has a noun phrase label. More than one data flow noun phrase can appear on a single arrow as long as all of the flows on the same arrow move together as one package.
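Several of the rules above can be checked mechanically. The sketch below is a hypothetical checker, not part of any DFD tool. It takes the kind of each symbol (`process`, `store`, or `external` for a source or sink) and a list of directed flows, and it reports processes without inputs or outputs, flows that return directly to the same symbol, and flows between non-process symbols that bypass a process.

```python
from collections import Counter


def check_dfd(kinds, flows):
    """kinds: symbol name -> 'process', 'store' or 'external' (source/sink).
    flows: list of (from, to) pairs. Returns a list of rule violations."""
    errors = []
    inputs = Counter(dst for _, dst in flows)
    outputs = Counter(src for src, _ in flows)
    for src, dst in flows:
        if src == dst:
            errors.append(f"{src}: a flow cannot go directly back to the same symbol")
        elif kinds[src] != "process" and kinds[dst] != "process":
            # store->store, source->store, source->sink, etc. all need a process
            errors.append(f"{src} -> {dst}: data must be moved by a process")
    for name, kind in kinds.items():
        if kind == "process" and inputs[name] == 0:
            errors.append(f"{name}: process has no inputs")
        if kind == "process" and outputs[name] == 0:
            errors.append(f"{name}: process has no outputs")
    return errors


# Hypothetical symbols, named per the capitalization convention above.
kinds = {
    "EMPLOYEE": "external",
    "Record Request": "process",
    "TRIP REQUESTS": "store",
    "ARCHIVE": "store",
}
good = [("EMPLOYEE", "Record Request"), ("Record Request", "TRIP REQUESTS")]
bad = good + [("TRIP REQUESTS", "ARCHIVE")]

print(check_dfd(kinds, good))  # []
print(check_dfd(kinds, bad))   # ['TRIP REQUESTS -> ARCHIVE: data must be moved by a process']
```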

SYSTEM TESTING AND IMPLEMENTATION

8.1 INTRODUCTION

Software testing is a critical element of software quality assurance and represents the ultimate review of specification, design and coding. In fact, testing is the one step in the software engineering process that could be viewed as destructive rather than constructive.

A strategy for software testing integrates software test case design methods into a well-planned series of steps that result in the successful construction of software. Testing is a set of activities that can be planned in advance and conducted systematically. The underlying motivation of program testing is to affirm software quality with methods that can be applied economically and effectively to both large and small-scale systems.

8.2. STRATEGIC APPROACH TO SOFTWARE TESTING

The software engineering process can be viewed as a spiral. Initially system engineering defines the role of software and leads to software requirement analysis where the information domain, functions, behavior, performance, constraints and validation criteria for software are established. Moving inward along the spiral, we come to design and finally to coding. To develop computer software we spiral in along streamlines that decrease the level of abstraction on each turn.

A strategy for software testing may also be viewed in the context of the spiral. Unit testing begins at the vertex of the spiral and concentrates on each unit of the software as implemented in source code. Testing progresses by moving outward along the spiral to integration testing, where the focus is on the design and construction of the software architecture. Taking another turn outward on the spiral, we encounter validation testing, where requirements established as part of software requirements analysis are validated against the software that has been constructed. Finally we arrive at system testing, where the software and other system elements are tested as a whole.

8.3. UNIT TESTING

Unit testing focuses verification effort on the smallest unit of software design, the module. The unit testing performed here is white-box oriented, and for some modules the steps were conducted in parallel.

- WHITE BOX TESTING

This type of testing ensures that

- All independent paths have been exercised at least once

- All logical decisions have been exercised on their true and false sides

- All loops are executed at their boundaries and within their operational bounds

- All internal data structures have been exercised to assure their validity.

To follow the concept of white box testing, we tested each form we created independently to verify that the data flow is correct, that all conditions are exercised to check their validity and that all loops are executed at their boundaries.
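The sketch below shows the white-box idea of exercising every logical decision on both its true and false sides. The function `fare_category` and its thresholds are hypothetical. The decision outcomes are recorded by hand only to keep the example self-contained; in practice a coverage tool (for example coverage.py run with branch measurement enabled) would record them.

```python
covered = set()  # decision outcomes observed so far: (decision, True/False)


def fare_category(distance_km, is_international):
    """Hypothetical unit under test with two decisions."""
    if is_international:
        covered.add(("international", True))
        return "international"
    covered.add(("international", False))
    if distance_km > 500:
        covered.add(("long_haul", True))
        return "long-haul domestic"
    covered.add(("long_haul", False))
    return "short-haul domestic"


ALL_OUTCOMES = {(d, side) for d in ("international", "long_haul") for side in (True, False)}

# One test case per decision outcome; 500 sits exactly on the boundary.
cases = [
    (100, True, "international"),
    (800, False, "long-haul domestic"),
    (500, False, "short-haul domestic"),
]
for distance, intl, expected in cases:
    assert fare_category(distance, intl) == expected

print("uncovered outcomes:", ALL_OUTCOMES - covered)  # uncovered outcomes: set()
```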

- BASIC PATH TESTING

The established technique of flow graphs with cyclomatic complexity was used to derive test cases for all the functions. The main steps in deriving test cases were:

Use the design of the code to draw the corresponding flow graph.

Determine the cyclomatic complexity of the resultant flow graph, using the formula:

V(G)=E-N+2 or

V(G)=P+1 or

V(G)=Number Of Regions

Where V(G) is Cyclomatic complexity,

E is the number of edges,

N is the number of flow graph nodes,

P is the number of predicate nodes.

Determine a basis set of linearly independent paths.
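Applying the formulas above to a small flow graph, as a worked sketch: the hypothetical graph below models an if-else (node 1) followed by a while loop (node 4), with edges given as `(from, to)` pairs. Both V(G) = E - N + 2 and V(G) = P + 1 give 3, which is the number of basis paths to test: 1-2-4-6, 1-3-4-6 and 1-2-4-5-4-6. The P + 1 form assumes every predicate node has exactly two outgoing edges.

```python
from collections import Counter


def cyclomatic_complexity(edges):
    """V(G) = E - N + 2 for a single connected flow graph."""
    nodes = {n for edge in edges for n in edge}
    return len(edges) - len(nodes) + 2


def predicate_nodes(edges):
    """Nodes with more than one outgoing edge (decision points)."""
    out_degree = Counter(src for src, _ in edges)
    return [n for n, d in out_degree.items() if d > 1]


# Hypothetical flow graph: node 1 is an if-else, node 4 is a while-loop test.
edges = [(1, 2), (1, 3), (2, 4), (3, 4), (4, 5), (5, 4), (4, 6)]

print(cyclomatic_complexity(edges))     # 3  (7 edges - 6 nodes + 2)
print(len(predicate_nodes(edges)) + 1)  # 3  (P = 2 predicate nodes)
```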

- CONDITIONAL TESTING

In this part of the testing, each of the conditions was tested for both its true and false outcomes, and all the resulting paths were tested, so that each path that may be generated by a particular condition is traced to uncover any possible errors.
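As an illustration of condition testing, the hypothetical rule below combines two simple conditions with `or`. The helper records which truth values each simple condition, and the decision as a whole, took across a test set; the three cases shown are enough for every one of them to be both true and false.

```python
def needs_approval(cost, is_international):
    """Hypothetical rule: two simple conditions joined by `or`."""
    return cost > 1000 or is_international


def condition_outcomes(cases):
    """Record the truth values taken by each simple condition and the decision."""
    seen = {"cost > 1000": set(), "is_international": set(), "decision": set()}
    for cost, intl in cases:
        seen["cost > 1000"].add(cost > 1000)
        seen["is_international"].add(intl)
        seen["decision"].add(needs_approval(cost, intl))
    return seen


def fully_covered(cases):
    """True when every condition and the decision were both True and False."""
    return all(v == {True, False} for v in condition_outcomes(cases).values())


print(fully_covered([(1500, False), (200, True), (200, False)]))  # True
print(fully_covered([(1500, False), (200, False)]))               # False
```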

- DATA FLOW TESTING

This type of testing selects the paths of the program according to the locations of definitions and uses of variables. This kind of testing was used only when local variables were declared. The definition-use chain method was used in this type of testing, and it was particularly useful for nested statements.
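A minimal sketch of the definition-use chain method, assuming straight-line code with no branches (a real data flow test on branching code would need a reaching-definitions analysis). Each hypothetical statement lists the variables it defines and uses, and a use is paired with the most recent earlier definition of the same variable.

```python
def du_chains(statements):
    """statements: (label, defs, uses) tuples in execution order, straight-line only.
    Returns (variable, defining label, using label) triples."""
    last_def = {}
    chains = []
    for label, defs, uses in statements:
        # Uses are resolved before this statement's own definitions,
        # so `total = total + fare` reads the previous value of total.
        for var in uses:
            if var in last_def:
                chains.append((var, last_def[var], label))
        for var in defs:
            last_def[var] = label
    return chains


# Hypothetical fragment:
# S1: total = 0
# S2: fare = read_fare()
# S3: total = total + fare
# S4: print(total)
program = [
    ("S1", ("total",), ()),
    ("S2", ("fare",), ()),
    ("S3", ("total",), ("total", "fare")),
    ("S4", (), ("total",)),
]

for chain in du_chains(program):
    print(chain)
```

Each printed triple is one definition-use pair that a test path should cover.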

- LOOP TESTING

In this type of testing, all the loops are tested at all possible limits. The following exercise was adopted for all loops:

All the loops were tested at their limits, just above them and just below them.

All the loops were skipped at least once.

For nested loops, the innermost loop was tested first, working outwards.

For concatenated loops, the values of dependent loops were set with the help of the connected loop.

Unstructured loops were resolved into nested loops or concatenated loops and tested as above.
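For a simple loop with at most n passes, a commonly used set of test values is: skip the loop, one pass, two passes, a typical m < n, and n - 1, n and n + 1 passes. The sketch below generates those counts and applies them to a hypothetical booking loop whose limit `MAX_PASSENGERS` is an assumed value.

```python
MAX_PASSENGERS = 10  # hypothetical upper limit n for the loop


def simple_loop_counts(n, typical=None):
    """Iteration counts for a simple loop allowing at most n passes:
    0 (skip), 1, 2, a typical m < n, then n - 1, n and n + 1."""
    m = typical if typical is not None else n // 2
    return sorted({c for c in (0, 1, 2, m, n - 1, n, n + 1) if c >= 0})


def book_passengers(count):
    """Hypothetical unit under test containing a simple loop."""
    if count > MAX_PASSENGERS:
        raise ValueError("too many passengers")
    booked = []
    for i in range(count):
        booked.append(f"P{i + 1}")
    return booked


results = {}
for c in simple_loop_counts(MAX_PASSENGERS):
    try:
        results[c] = len(book_passengers(c))
    except ValueError:
        results[c] = "rejected"  # n + 1 must be refused

print(results)
```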

Each unit has been separately tested by the development team itself, and all inputs have been validated.

SYSTEM SECURITY

9.1 INTRODUCTION

The protection of computer-based resources, including hardware, software, data, procedures and people, against unauthorized use or natural disaster is known as System Security.

System Security can be divided into four related issues:

- Security

- Integrity

- Privacy

- Confidentiality

SYSTEM SECURITY refers to the technical innovations and procedures applied to the hardware and operating systems to protect against deliberate or accidental damage from a defined threat.

DATA SECURITY is the protection of data from loss, disclosure, modification and destruction.

SYSTEM INTEGRITY refers to the proper functioning of hardware and programs, appropriate physical security and safety against external threats such as eavesdropping and wiretapping.

PRIVACY defines the rights of the user or organizations to determine what information they are willing to share with or accept from others and how the organization can be protected against unwelcome, unfair or excessive dissemination of information about it.

CONFIDENTIALITY is a special status given to sensitive information in a database to minimize the possible invasion of privacy. It is an attribute of information that characterizes its need for protection.

9.2 SECURITY IN SOFTWARE

System security also refers to various validations on data in the form of checks and controls that prevent the system from failing. It is always important to ensure that only valid data is entered and only valid operations are performed on the system. The system employs two types of checks and controls:

CLIENT SIDE VALIDATION

Various client side validations are used to ensure on the client side that only valid data is entered. Client side validation saves server time and load to handle invalid data. Some checks imposed are:

- JavaScript is used to ensure that required fields are filled with suitable data only. Maximum lengths of the form fields are appropriately defined.

- Forms cannot be submitted without filling up the mandatory data so that manual mistakes of submitting empty fields that are mandatory can be sorted out at the client side to save the server time and load.

- Tab-indexes are set according to the need and taking into account the ease of user while working with the system.
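The required-field and maximum-length checks listed above can be sketched as follows. In the system they run as JavaScript in the browser; the sketch uses Python only to keep all examples in one language, and the form fields and limits are hypothetical. Because client-side checks can be bypassed, the same rules are normally repeated on the server.

```python
# Hypothetical form definition: field name -> (required, maximum length)
TRIP_FORM = {
    "employee_name": (True, 50),
    "destination": (True, 40),
    "remarks": (False, 200),
}


def validate_form(data, spec=TRIP_FORM):
    """Return error messages; an empty list means the form may be submitted."""
    errors = []
    for name, (required, max_length) in spec.items():
        value = data.get(name, "").strip()
        if required and not value:
            errors.append(f"{name} is required")
        elif len(value) > max_length:
            errors.append(f"{name} must be at most {max_length} characters")
    return errors


print(validate_form({"employee_name": "Asha", "destination": "London"}))  # []
print(validate_form({"employee_name": "  ", "destination": "x" * 41}))
# ['employee_name is required', 'destination must be at most 40 characters']
```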

SERVER SIDE VALIDATION

Some checks cannot be applied on the client side. Server-side checks are necessary to prevent the system from failing and to inform the user that an invalid operation has been performed or that the performed operation is restricted. Some of the server-side checks imposed are:

- Server-side constraints have been imposed to check the validity of primary keys and foreign keys. A primary key value cannot be duplicated; any attempt to duplicate a primary key value results in a message informing the user. Values in forms that use a foreign key can be updated only with existing foreign key values.

- The user is informed through appropriate messages about successful operations or exceptions occurring on the server side.

- Various access control mechanisms have been built so that one user cannot interfere with another. Access permissions for various types of users are controlled according to the organizational structure. Only permitted users can log on to the system, and they have access according to their category. User names, passwords and permissions are controlled on the server side.

- Using server side validation, constraints on several restricted operations are imposed.
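The primary key and foreign key checks can be sketched with Python's built-in `sqlite3` module standing in for SQL Server, which the project actually uses. The `employee` and `trip` tables are hypothetical. SQLite reports a duplicate key as a UNIQUE constraint failure and enforces foreign keys only after `PRAGMA foreign_keys = ON`; the error-text matching below is specific to SQLite and would differ for SQL Server.

```python
import sqlite3


def make_db():
    """In-memory stand-in for the project's database (hypothetical tables)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    conn.execute("CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.execute(
        "CREATE TABLE trip (trip_id INTEGER PRIMARY KEY, "
        "emp_id INTEGER NOT NULL REFERENCES employee(emp_id), destination TEXT)"
    )
    return conn


def save(conn, sql, params):
    """Run one insert and turn constraint failures into a message for the user."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return "Record saved successfully."
    except sqlite3.IntegrityError as exc:
        text = str(exc)
        if "UNIQUE" in text:
            return "This key value already exists; duplicates are not allowed."
        if "FOREIGN KEY" in text:
            return "Please choose an existing value for the referenced field."
        return "The operation was rejected by the database."


conn = make_db()
print(save(conn, "INSERT INTO employee VALUES (?, ?)", (1, "Asha")))
print(save(conn, "INSERT INTO employee VALUES (?, ?)", (1, "Ravi")))        # duplicate key
print(save(conn, "INSERT INTO trip VALUES (?, ?, ?)", (10, 99, "London")))  # unknown employee
print(save(conn, "INSERT INTO trip VALUES (?, ?, ?)", (10, 1, "London")))
```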

CONCLUSION

It has been a great pleasure for me to work on this exciting and challenging project. This project proved valuable to me, as it provided practical knowledge not only of programming web-based applications in ASP.NET and C#.NET and, to some extent, Windows applications and SQL Server, but also of all the handling procedures related to “Voyage Management”. It also provided knowledge of the latest technology used in developing web-enabled applications and of client-server technology, which will be in great demand in future. This will provide better opportunities and guidance for developing projects independently in future.

BENEFITS:

The project is identified by the merits of the system offered to the user. The merits of this project are as follows:

- It’s a web-enabled project.

- This project allows the user to enter data through simple and interactive forms, which makes it very easy for the client to enter the desired information.

- The user is mainly concerned about the validity of the data being entered. There are checks at every stage of any new creation, data entry or update so that the user cannot enter invalid data, which could create problems at a later stage.

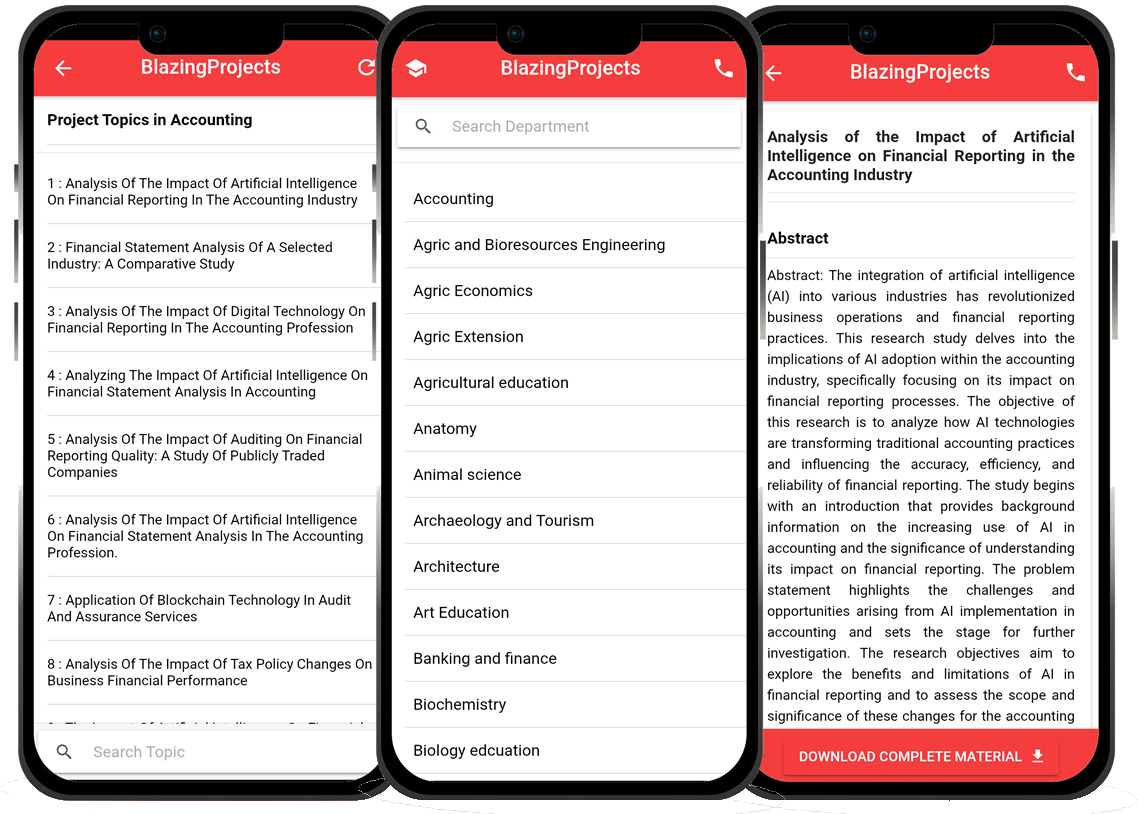

Blazingprojects Mobile App

📚 Over 50,000 Project Materials

📱 100% Offline: No internet needed

📝 Over 98 Departments

🔍 Software coding and Machine construction

🎓 Postgraduate/Undergraduate Research works

📥 Instant Whatsapp/Email Delivery

Related Research

Predicting Disease Outbreaks Using Machine Learning and Data Analysis...

The project topic, "Predicting Disease Outbreaks Using Machine Learning and Data Analysis," focuses on utilizing advanced computational techniques to ...

Implementation of a Real-Time Facial Recognition System using Deep Learning Techniqu...

The project on "Implementation of a Real-Time Facial Recognition System using Deep Learning Techniques" aims to develop a sophisticated system that ca...

Applying Machine Learning for Network Intrusion Detection...

The project topic "Applying Machine Learning for Network Intrusion Detection" focuses on utilizing machine learning algorithms to enhance the detectio...

Analyzing and Improving Machine Learning Model Performance Using Explainable AI Tech...

The project topic "Analyzing and Improving Machine Learning Model Performance Using Explainable AI Techniques" focuses on enhancing the effectiveness ...

Applying Machine Learning Algorithms for Predicting Stock Market Trends...

The project topic "Applying Machine Learning Algorithms for Predicting Stock Market Trends" revolves around the application of cutting-edge machine le...

Application of Machine Learning for Predictive Maintenance in Industrial IoT Systems...

The project topic, "Application of Machine Learning for Predictive Maintenance in Industrial IoT Systems," focuses on the integration of machine learn...

Anomaly Detection in Internet of Things (IoT) Networks using Machine Learning Algori...

Anomaly detection in Internet of Things (IoT) networks using machine learning algorithms is a critical research area that aims to enhance the security and effic...

Anomaly Detection in Network Traffic Using Machine Learning Algorithms...

Anomaly detection in network traffic using machine learning algorithms is a crucial aspect of cybersecurity that aims to identify unusual patterns or behaviors ...

Predictive maintenance using machine learning algorithms...

Predictive maintenance is a proactive maintenance strategy that aims to predict equipment failures before they occur, thereby reducing downtime and maintenance ...